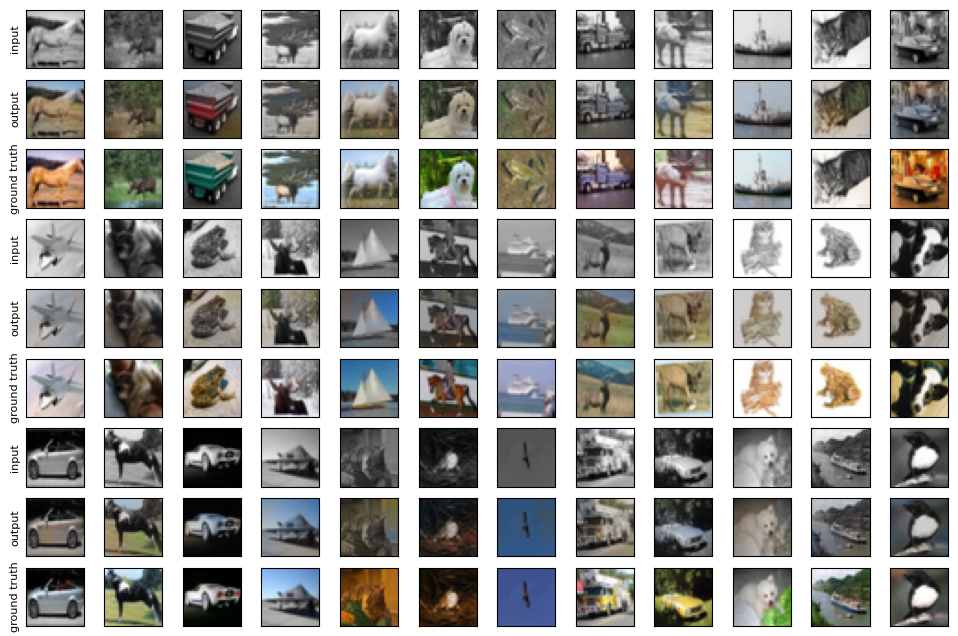

Colorization with CIFAR-10

An autoencoder-based image colorization method.

I completed this project on my own.

Model

- Input: grayscale image with 3 channels
- Output: RGB image with 3 channels

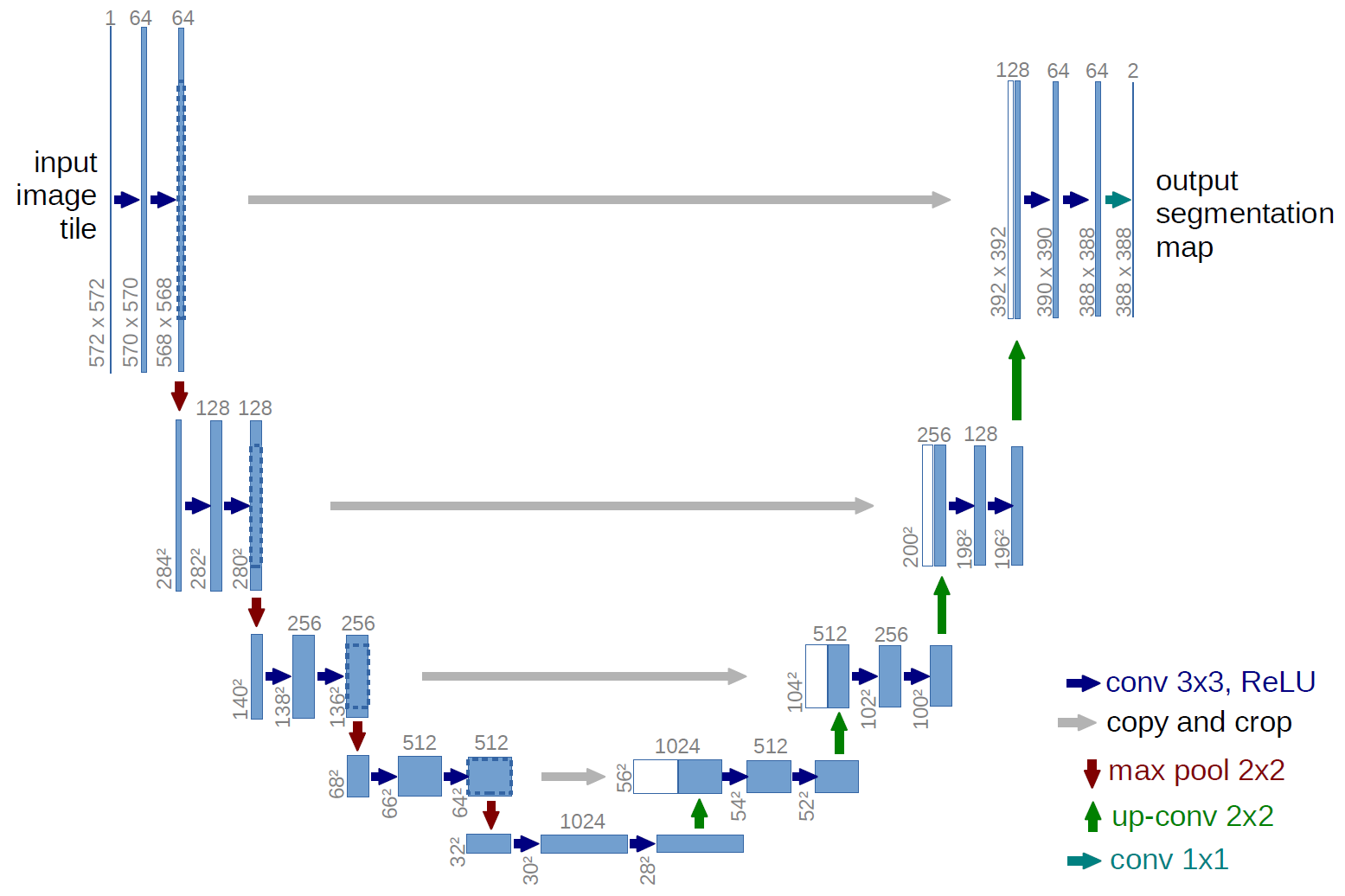

Note: The colorization task is meant to be solved with an autoencoder, so U-Net was chosen as the network architecture.

The task is based on an autoencoder model, which consists of an encoder and a decoder. A custom dataloader loads the grayscale images together with their originals, so each record is a pair made up of a grayscale image and its original image. The neural network takes a grayscale image as input and outputs a colorized one.
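The pairing described above can be sketched as a small PyTorch dataset. This is a minimal illustration rather than the project's actual loader: the class name `GrayColorPairs`, the BT.601 luminance weights, and the random tensors standing in for CIFAR-10 images are all assumptions.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class GrayColorPairs(Dataset):
    """Hypothetical dataset that yields (grayscale, original) image pairs."""

    def __init__(self, rgb_images: torch.Tensor):
        # rgb_images: float tensor of shape (N, 3, H, W) with values in [0, 1].
        self.rgb_images = rgb_images
        # BT.601 luma weights; an assumption, the original conversion is not specified.
        self.weights = torch.tensor([0.299, 0.587, 0.114]).view(3, 1, 1)

    def __len__(self) -> int:
        return self.rgb_images.shape[0]

    def __getitem__(self, idx: int):
        rgb = self.rgb_images[idx]
        # Weighted sum over the channel axis gives one (1, H, W) luminance map.
        gray = (rgb * self.weights).sum(dim=0, keepdim=True)
        # Repeat the map so the network input keeps 3 identical channels.
        gray3 = gray.expand(3, -1, -1).clone()
        return gray3, rgb


# Random tensors stand in for 32x32 CIFAR-10 images in this sketch.
fake_cifar = torch.rand(8, 3, 32, 32)
loader = DataLoader(GrayColorPairs(fake_cifar), batch_size=4)
gray_batch, rgb_batch = next(iter(loader))
```

Each batch then holds the network input (`gray_batch`) and the target (`rgb_batch`) with the same shape.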

The input grayscale image also has 3 channels, but all three hold exactly the same value at each pixel. The extra channels therefore carry no additional information, and the input could be changed to a single channel.
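To illustrate why the duplicated channels are redundant, the sketch below builds a 3-channel grayscale batch from one channel and recovers it by slicing. The layer sizes (64 output channels, 3x3 kernel) are illustrative assumptions, not the project's settings.

```python
import torch
import torch.nn as nn

# A 3-channel grayscale batch built by repeating one channel (stand-in data).
gray1 = torch.rand(4, 1, 32, 32)
gray3 = gray1.repeat(1, 3, 1, 1)

# Keeping only the first channel recovers the single-channel image exactly.
recovered = gray3[:, :1]

# With a single-channel input, the first convolution takes 1 input channel
# instead of 3. Output width and kernel size here are illustrative choices.
first_conv = nn.Conv2d(in_channels=1, out_channels=64, kernel_size=3, padding=1)
features = first_conv(recovered)
```

Only the first layer of the network would need to change; the rest of the architecture is unaffected by the number of input channels.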

U-Net

The network follows the U-Net framework. Skip connections link the convolutional (encoder) layers to the corresponding deconvolutional (decoder) layers, so that less information is lost through down-sampling and up-sampling.

A dropout layer and a batchnorm layer follow each MaxPool and each ConvTranspose.

The [Conv->Conv->MaxPool] block is applied twice instead of four times, so that the feature maps still have a reasonable resolution at the lowest level of the architecture. Assuming 2x2 pooling, a 32x32 CIFAR-10 image shrinks to 8x8 after two blocks, whereas four blocks would leave only 2x2.

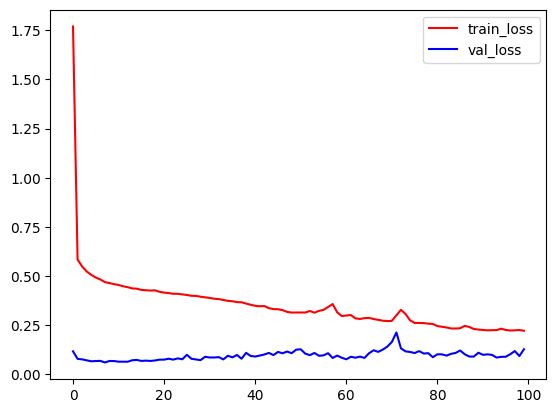
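The structure described in this section could look roughly like the following PyTorch sketch. It is not the project's actual network: the class name `SmallUNet`, the channel widths, the dropout rate, the dropout-then-batchnorm ordering, the ReLU activations, and the sigmoid output are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


def double_conv(in_ch: int, out_ch: int) -> nn.Sequential:
    # Conv -> Conv, each followed by ReLU; padding=1 keeps the spatial size.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1), nn.ReLU(),
        nn.Conv2d(out_ch, out_ch, kernel_size=3, padding=1), nn.ReLU(),
    )


def regularize(ch: int, p: float) -> nn.Sequential:
    # Dropout + batchnorm, used after each MaxPool and each ConvTranspose.
    return nn.Sequential(nn.Dropout(p), nn.BatchNorm2d(ch))


class SmallUNet(nn.Module):
    """Hypothetical U-Net with two down-sampling stages."""

    def __init__(self, base: int = 32, p: float = 0.2):
        super().__init__()
        self.enc1 = double_conv(3, base)
        self.pool1 = nn.MaxPool2d(2)
        self.reg1 = regularize(base, p)
        self.enc2 = double_conv(base, base * 2)
        self.pool2 = nn.MaxPool2d(2)
        self.reg2 = regularize(base * 2, p)
        self.bottleneck = double_conv(base * 2, base * 4)
        self.up2 = nn.ConvTranspose2d(base * 4, base * 2, kernel_size=2, stride=2)
        self.reg_up2 = regularize(base * 2, p)
        self.dec2 = double_conv(base * 4, base * 2)
        self.up1 = nn.ConvTranspose2d(base * 2, base, kernel_size=2, stride=2)
        self.reg_up1 = regularize(base, p)
        self.dec1 = double_conv(base * 2, base)
        self.head = nn.Conv2d(base, 3, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        s1 = self.enc1(x)                                # full resolution
        s2 = self.enc2(self.reg1(self.pool1(s1)))        # 1/2 resolution
        b = self.bottleneck(self.reg2(self.pool2(s2)))   # 1/4 resolution
        u2 = self.reg_up2(self.up2(b))                   # back to 1/2
        d2 = self.dec2(torch.cat([u2, s2], dim=1))       # skip connection
        u1 = self.reg_up1(self.up1(d2))                  # back to full
        d1 = self.dec1(torch.cat([u1, s1], dim=1))       # skip connection
        return torch.sigmoid(self.head(d1))              # RGB in [0, 1]


model = SmallUNet().eval()
with torch.no_grad():
    out = model(torch.rand(2, 3, 32, 32))
```

The two `torch.cat` calls are the skip connections: they concatenate encoder features with the up-sampled decoder features at the same resolution, which is why the decoder convolutions take twice as many input channels.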

Training

The training process can be visualised as shown in the figure below:

For more information, see the work in my GitHub repository!